Lorenzo Mur and Carlos Plou are members of the Robotics, Computer Vision and Artificial Intelligence group at the I3A (the Aragon Engineering Research Institute of the University of Zaragoza). Their research focuses on automatically interpreting and analysing complex scenes using advanced computer vision and deep learning (artificial intelligence) techniques. One possible application is in assistive devices for people with impaired vision or who have lost their sight completely.

Their work won awards at the Conference on Computer Vision and Pattern Recognition (CVPR), held in Seattle, the most important international event in the field.

Anticipating the future

Lorenzo Mur researches egocentric vision methods, which analyse video recorded from the wearer's point of view, as if the person were wearing a camera in their glasses. He develops artificial intelligence methods that ‘understand’ as well as possible what the person is doing, where the objects around them are and what they are, and what the person is going to do next. For example, ‘by identifying where the objects are, we can highlight their silhouette within the blind person's implant,’ he explains.

His work complements that of Carlos Plou, who focuses more on recognising actions in videos using event cameras or language models. In his research, Plou uses safe and reliable AI methods. A well-known problem with ChatGPT and similar models ‘is that it always gives an answer, and if it doesn't know the answer, it makes it up. With these methods, we make it so that if it doesn't know the answer, it says “I don't know”, or “it's possible, but I'm not entirely sure”’.

Where are the keys?

One of the challenges the researchers tackled could be applied to finding a scene in a film, for example: ‘Ok Google, go to the scene where the dragon burns down the city’. Looking further into the future, ‘wearing glasses that record everything we do day to day, we could ask our AI model where I left my house keys. The model would play the role of “mother”, reminding us where we last left them,’ the two young researchers stress.

One of the goals of the I3A research group is to develop an AI assistant for older people or the visually impaired that is as easy to wear as a pair of glasses. Using a camera in the frame and processing all the information it captures, the AI would understand what the person is doing and where they are. In the long term, the methods under development could, for example, warn a person who has left the hob on, helping to prevent a fire.

First and second prize in Seattle

At the Computer Vision and Pattern Recognition conference in Seattle this summer, they competed to build an AI model that, given a video and a text description, finds the part of the video that matches that description. ‘Our AI system tells you where in the video each action takes place,’ they say. The proposal won them first prize.

Lorenzo Mur also won second prize for a model that predicts the next action in a video before it has been seen. Built into smart glasses, this action anticipation method could, for example, warn an operator who makes a mistake while repairing an electrical panel, helping to avoid a short circuit.

Winning these awards at the largest international conference on AI and computer vision is an important milestone for young researchers facing the challenges of doctoral life. ‘Given the current competition in AI research, where it is almost impossible to compete with big companies like Meta, Google and Amazon, receiving an award like this is undoubtedly a motivational boost,’ says Lorenzo Mur.

For Carlos Plou, it was his first time at this conference, and ‘it helped me to realise the impact of our research group. Working day to day, you don't realise the impact of your professors, but when you go to an event of this magnitude, and people know perfectly well where Zaragoza is and ask you about one of your professors, you become aware of the group you are part of’.

Destination Milan: European Conference on Computer Vision

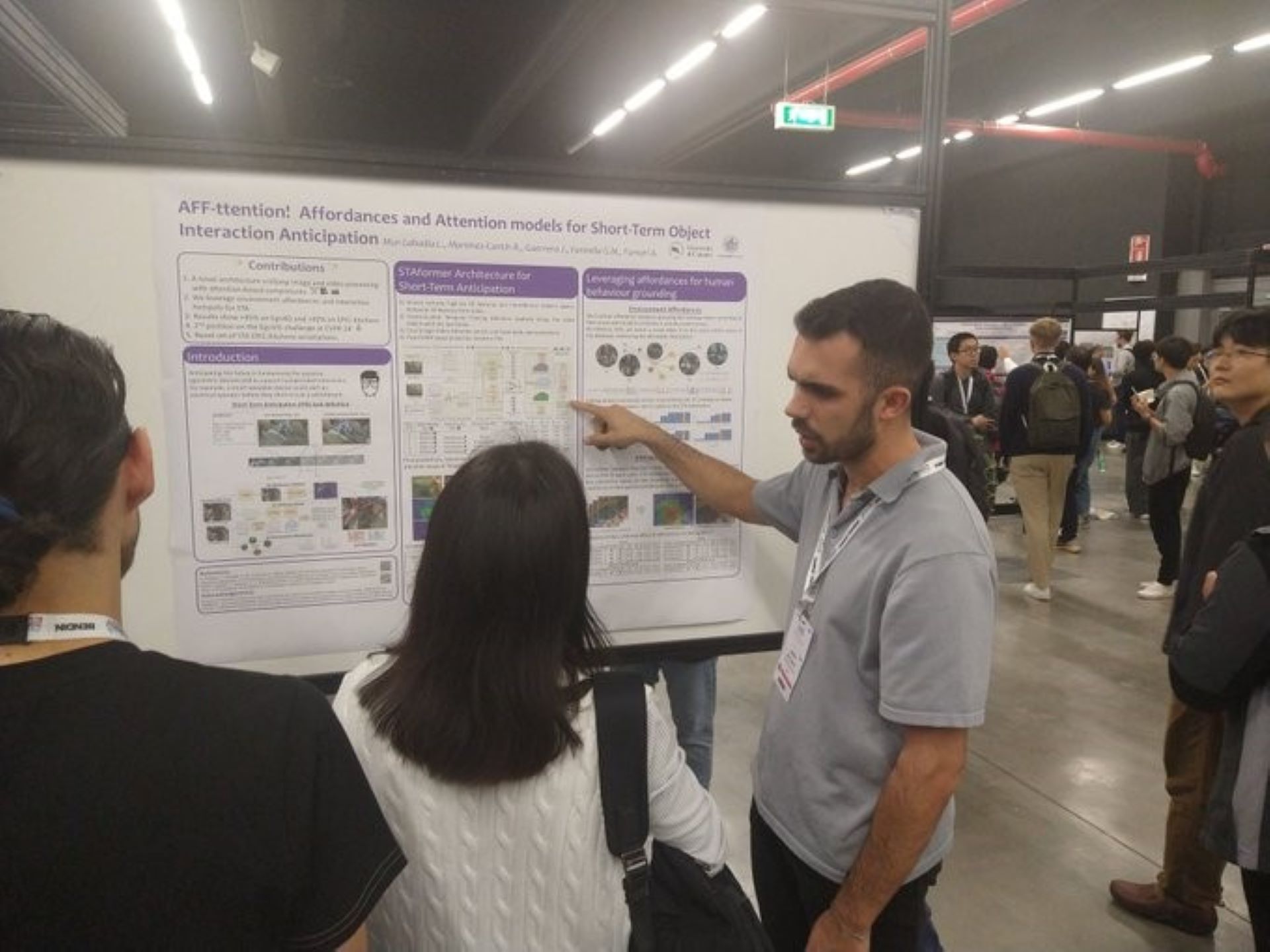

They are currently taking part in the European Conference on Computer Vision (ECCV), the second-largest international event on computer vision and AI.

Lorenzo Mur is presenting the work, carried out in collaboration with the University of Catania (Italy), for which he won second prize at CVPR.

Carlos Plou will present a study on action recognition during sleep, carried out in collaboration with Bitbrain, and another project that automatically creates drone shows ‘by writing the shape you want to generate, using a model very similar to the one ChatGPT uses to generate images’, says the young engineer. He is developing this project with another young researcher, Pablo Pueyo, with the support of Stanford University.